* and/or Editing

It seems curious that we so rarely stop to reflect on the word-processing software and other digital writing tools we use every day, given how much work they do for us. Or how much work they do for our students (I’m thinking of you, teachers of writing).

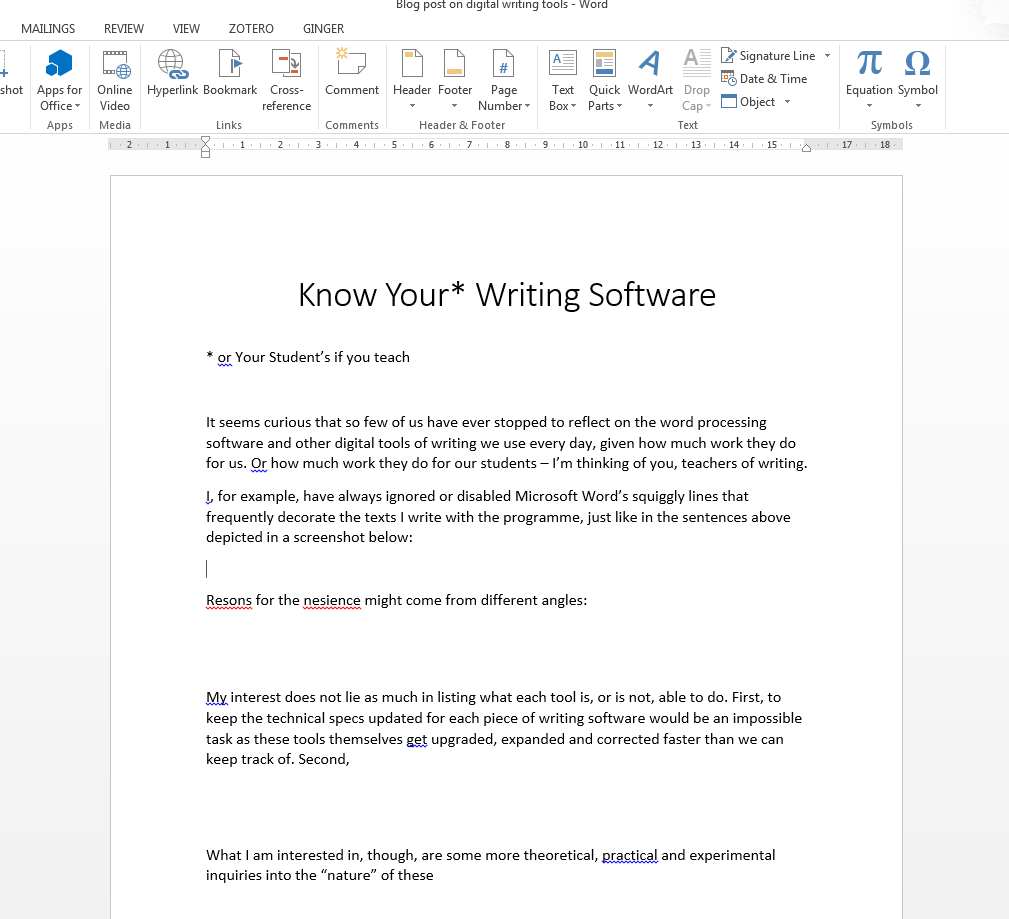

I, for example, used to ignore or disable Microsoft Word’s squiggly underlines that frequently decorated the texts I wrote with it, just like in the sentences above, captured in a screenshot below:

Much as with spam online, I accepted the underlines being there without giving them much attention. My response to the programme’s automatic suggestions became automatic: ignore.

There were different reasons for my deliberate nescience (and what about yours?). I was suspicious of any suggestions given by MS Word’s Grammar Checker (MSGC)[1] because:

- I had seen it be wrong in the past;

- I do not like being told what to do – especially by a machine;

- “I know my grammar” and am a confident writer/editor;

- I like to break rules;

- I had never asked MS Word to check my writing (it’s turned on in the software by default);

- it doesn’t know my native tongue (Latvian) well enough, which makes me a bit grumpy;

- it failed to recognize some fancy proper names (of thinkers and writers I aspire to emulate);

- and so on.

Also, I simply never bothered to venture into unfamiliar areas, such as a programme’s advanced options and settings, unless I had to. You just get by with what’s placed in front of you.

Our invisible, ubiquitous co-writers

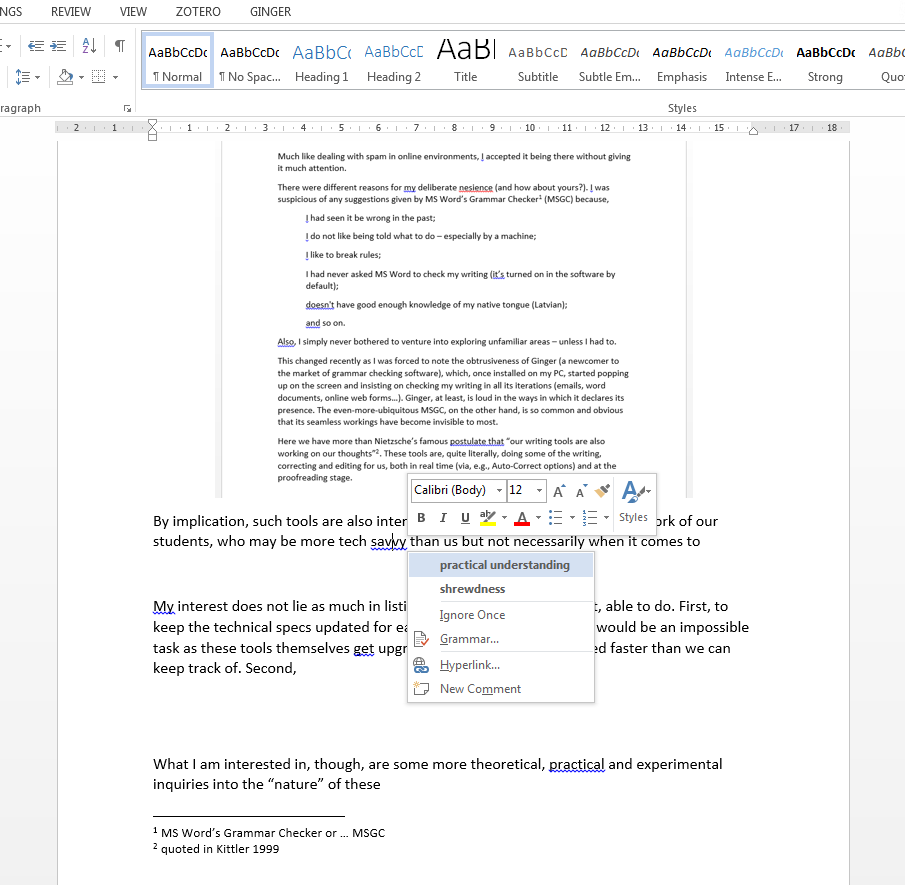

This changed recently, thanks to the obtrusiveness of Ginger, a promising, relatively new arrival on the grammar-checking software market. Once installed on my PC, it would not stop popping up on my screen and commenting on my writing in all its forms (emails, Word documents, online web forms…). Ginger, at least, declares its presence loudly. The even more ubiquitous MSGC, on the other hand, is by now so common and familiar to us that its seamless workings have become invisible.

The thing is, here we face something more than Nietzsche’s famous postulate, “Our writing tools are also working on our thoughts.”[2] Twenty-first-century writing tools are, quite literally, doing some of the writing, correcting and editing for us, both as we type (via AutoCorrect options) and, more recursively, as we write and revise (responding to those squiggly lines).

By implication, such tools are also intervening in and shaping the written work of our students, who may be more tech-savvy than we are but not necessarily better informed or more confident when it comes to evaluating writing (and evaluating how well, or how badly, a piece of software does so). As Professor of English and language historian Anne Curzan notes in her most recent book, Fixing English, the ubiquity and “the assumed authority” of a tool like MS Word’s grammar checker make it “arguably the most powerful prescriptive language force in the world at this point.” That does not mean its actions should go unmonitored or unquestioned. Quite the opposite. But the tool’s implied, anonymous authority means that not only students but also many staff members take its corrections at face value.

Ask important questions

Software tools may complicate our students’ engagement with writing in ways we have not considered before. Joe Pinsker and Nicholas Carr, for example, would argue that relying on automated writing assistance obstructs rather than assists students’ learning of grammar. The more the machine corrects something for us, the more oblivious we become to the “mistake”: “Our brains seem to become less vigilant when we know a grammatical safety net will catch us” (Pinsker). Essentially, relying on a piece of software to “fix” our writing makes us complacent and “turns us from [language] actors into observers” (Carr).

Nancy Bray and Joohee Huh make a good point about the militant linearity of most word-processing software (MS Word, OpenOffice Writer), which leaves little room for the early stages of writing (brainstorming, mind-mapping, scribbling, drafting). If you or your student is a novice writer, or a non-linear writer like myself, a common word processor will not be the best tool to start with. After all, the standard-setting tool, MS Word, was designed for office use: for efficiently writing up and formatting business reports.

MS Word, then, is the first writing tool that comes to mind because, in the much-cited words of Sandy Baldwin, “The world runs on Microsoft Word documents.” Next comes the loud, heavily advertised parade of boastful market leaders in language-checking software: Grammarly, Ginger, ProWritingAid, WhiteSmoke, etc. But there is plenty of potentially useful, weird and fun stuff too, available (mostly) for free online, whose features could just as easily be misused or abused by inattentive writers: TwinWord Writer’s Tone Analyzer, for example, or the aforementioned Ginger Software’s Sentence Rephraser.

Would your students (and would you) know whether MS Word’s grammar checker is right when it insists on correcting, for example, a split infinitive with two or more words in between (e.g., “to very badly want”)? (That remains a matter of style. The end of the era of hunting down split infinitives was officially announced by a new Oxford dictionary in 1998.)

Scholars such as Alex Vernon, Tim McGee and Patricia Ericsson, and Anne Curzan rightly question the alleged authority behind the program’s grammar and style rules, which, more often than not, turn out to be absolutist, dated, inaccurate, unclear or simply “mystifying”. Furthermore, the MSGC, like students (and often more mature writers) themselves, likes black-and-white clarity and definite answers, so it will mark as an “error” even cases that simply belong to non-standard (but systematic) usage of English. The MSGC does not like the fact that language is fuzzy and always evolving. It does not accept that there are many Englishes. Are we, and are our students, aware of this when receiving the omnipresent grammarian’s rigid corrections?

Here are some of the important questions one could (and should) ask about digital writing tools, regardless of the tool’s functions:

But the most useful and important question could be this:

What can we, my students and myself, learn about our writing from digital writing tools?

It is any such tool’s capability to draw attention to particular aspects of one’s writing (be it grammar, readability or tone), and so to invite self-reflection, that holds the greatest pedagogical value. So use them, above all, to get to know your own writing: its weaknesses and strengths, its voice, tone and hesitant silences, its length, colour and structure, its flexibility and ease… Used creatively and with caution, diagnostic tools can be super helpful here. And “fixing” one’s writing becomes less important than knowing it better, in order to deliberately construct the voice you want.

Research more

Relatively speaking, very little research has been done on present-day digital writing tools. I am not aware, for example, of any scholarly work on diagnostic tools of syntax-level readability and style such as The Hemingway App or The Writer’s Diet. Both are great for drawing writers’ attention to their writerly quirks (from them I have learned that I tend to be quite adverbial and wordy, as this sentence also illustrates).

Most international scholarly work appears to be devoted to automated essay-scoring tools, which I have set aside, as they invite a discussion of their own, and of a different kind. There are some interesting qualitative, and less interesting quantitative, inquiries into popular diagnostic or corrective writing tools, many of which investigate the tools’ usability for ESL students (which, again, is a slightly different topic). Either way, the bulk of available research findings is dated: the tools discussed have since either been developed beyond recognition or, failing to evolve fast enough, died a natural death.

That is also why it would be very difficult to present an up-to-date taxonomy of all existing tools (my initial, ambitious goal). Digital writing software gets upgraded, redeveloped, morphed, expanded and corrected faster than anyone could possibly keep track of. Also, some (not necessarily unbiased) developers of other writing tools have already made some basic comparisons (see the list of reading material below).

What I am interested in are more theoretical, practical and experimental inquiries into the “nature” of our digital co-writers and editors. What can we learn about various types of writing from our engagement with these tools? Will they be doing all the writing work for us soon? If so, what will that writing be like? And what does it mean “to write”, anyway?

So watch this website fill with more material as, throughout this year, I take on some of these tools and test their affordances, both in work with students and individually, as a research writer.

Meanwhile, below are some interesting tools and reading materials to explore, as well as borrowed suggestions for exercises with students.

Diagnostic & corrective tools to explore

Useful exercises for work with students

Make your students pay attention to their writing/editing software by engaging them in the following activities, as suggested by Alex Vernon, Michael Milone, and Reva Potter and Dorothy Fuller, respectively. (Thanks to the authors for letting me quote from their descriptions of classroom activities.)

Activity 1, Rethinking Language Conventions and Microsoft Word (Vernon)

Observe “the inextricable link of the programs’ checking options with certain styles of writing.” Discuss “how rhetorical context determines grammar and style expectations and standards, and of how these language practices and standards aren’t absolute.” Discuss “what styles the word-processing software of our choice employs, the checking options it considers appropriate for that style, and the implications thereof.” Let students “challeng[e] the assumption of a universal student/academic discourse – as if the conventions of business communication and poetry explication are the same…”

(See Alex Vernon, “Computerized Grammar Checkers 2000: Capabilities, Limitations, and Pedagogical Possibilities,” Computers and Composition 17 (2000), 344-345)

Activity 2, Revising and Editing (Vernon)

- Ask students to brainstorm other possible checking options, other styles, and the checking options appropriate for that style.

- Have students, either individually or in small groups, articulate responses to program feedback to their own writing. Students could present their responses either in writing (in a separate document, or in annotations) or orally. Having students work together especially helps students decipher incorrect and misleading program feedback.

- Task students to run Grammar-as-you-go on a completed text and – since the GAYG function simply underlines suspect constructions – to address the flagged items without accessing the checker’s feedback (by clicking the right mouse button). An improved sentence makes the underlining go away. This is a version of the technique of Hull et al. (1987) [3] for marking the text without identifying the problem, thereby teaching students to learn to edit themselves. It also avoids the confusion of misleading feedback altogether.

- Focus on a particular issue by having students check their papers with only one checking option (or several options that look for the same essential issue), as described with “wordiness” above. Increase the number of issues checked as the semester progresses.

- Ask students to write “bad” sentences, either to successfully trigger the checker or fool it.

- Hold contests, pitting human checkers against one another and against the computer.

- Have the computer and the students independently search for subject-verb agreement errors.

- Compare the rules of the grammar checker with the discussions of the same issues in your writing handbook. If you choose an issue on which the sources disagree, you have instantly challenged any monolithic sense of language use students might possess, and have taken a solid step toward enabling students to analyze the rules, the rhetorical situation of their writing, and deciding for themselves (not to mention just getting the handbook off the shelf).

(Vernon, “Computerized Grammar Checkers 2000,” 345-346)

Activity 3, Modelling Engagement with Tools (Milone, as described by Vernon)

- Model the grammar checker editing process in front of the class via projection, and discuss why the computer flagged items, the teacher’s response to the program’s feedback, and the technical limitations of the program.

- Have groups of two or three students analyse student texts and grammar checker feedback.

- Have students enable only certain checking options to focus on particular kinds of error.

(Vernon, “Computerized Grammar Checkers 2000,” 336)

Activity 4, Connecting the Grammar Checker to Instruction (Potter & Fuller)

- “We designed the four-month action research study to include direct instruction of the grammar checker and regular grammar instruction enhanced with use of grammar-check tools. Students first learned about the checker, its components and purposes, before beginning the agreed-on three grammar topics. Once into the units, lessons incorporated grammar check in a number of ways. Students composed or typed essays with the grammar-check tools turned off and on; they wrote sentences to ‘trigger’ grammar-check error identification; they compared terminology and rules of grammar from text resources with those on the computer checker; and they explored the readability statistics, which report sentence length and the grade level of their writing.”

- “A favorite activity for the seventh graders was typing the textbook ‘pretest’ for the subject-verb agreement unit. Students then observed the grammar-check performance, reported their results, and hypothesized why the computer grammar checker may have missed or misdiagnosed an error. […] subsequent units students eagerly typed their assigned ‘pretest’ sentences, typed extra if they had time, and began hypothesizing at their individual computers about the accuracy of the grammar checker before the results were reported.”

- “Another engaging use of the grammar check allowed students to personalize their grammar experience by creating original sentence examples to challenge the checker: practicing examples of active or passive voice, creating possible subject-verb agreement problems, and changing simple sentences to compound or complex. Students watched the computer screens as the checker ‘reacted’ to the sentences they created, and they compared and discussed the checker’s recommendations with their classmates.”

(See Reva Potter & Dorothy Fuller, “My New Teaching Partner? Using the Grammar Checker in Writing Instruction,” English Journal 98.1 (September 2008), 38)

References (and other interesting reading material)

Baldwin, Sandy. “Purple Dotted Underlines: Microsoft Word and the End of Writing.” Afterimage 30.1 (July/August 2002): 6-7.

Bray, Nancy. “Writing with Scrivener: A Hopeful Tale of Disappearing Tools, Flatulence, and Word Processing Redemption.” Computers and Composition 30 (2013): 197-210.

Buck, Amber M. “The Invisible Interface: MS Word in the Writing Center.” Computers and Composition 25 (2008): 396-415.

Carr, Nicholas. “All Can Be Lost: The Risk of Putting Our Knowledge in the Hands of Machines.” The Atlantic (November 2013).

Curzan, Anne. Fixing English: Prescriptivism and Language History. Cambridge: Cambridge University Press, 2014.

Dale, Robert. “Industry Watch: Checking in on Grammar Checking.” Natural Language Engineering 22.3 (2016): 491-495.

Huh, Joohee. “Why Microsoft Word Does Not Work for Novice Writers.” Interactions (March/April 2013): 58-61.

Kemp, F. “Who Programmed This? Examining the Instructional Attitudes of Writing-Support Software.” Computers and Composition 10 (1992): 9-24.

Kostadinova, Viktorija. “Microsoft Grammar and Style Checker (‘Consider Revising’).” English Today 31.4 (no. 124, December 2015): 3-4.

McAlexander, Patricia J. “Checking the Grammar Checker: Integrating Grammar Instruction with Writing.” Journal of Basic Writing 19.2 (Fall 2000): 124-140.

McGee, Tim & Patricia Ericsson. “The Politics of the Program: MS Word as the Invisible Grammarian.” Computers and Composition 19 (2002): 453-470.

Pinsker, Joe. “Punctuated Equilibrium: Will Autocorrect Save the Apostrophe, and Slow Language’s Evolution?” The Atlantic (July/August 2014).

Potter, Reva & Dorothy Fuller. “My New Teaching Partner? Using the Grammar Checker in Writing Instruction.” English Journal 98.1 (September 2008): 36-41. Available online from National Writing Project resource page.

Vernon, Alex. “Computerized Grammar Checkers 2000: Capabilities, Limitations, and Pedagogical Possibilities.” Computers and Composition 17 (2000): 329-350.

Whithaus, Carl. “Always Already: Automated Essay Scoring and Grammar-Checkers in College Writing Courses.” In Machine Scoring of Student Essays: Truth and Consequences, edited by Patricia F. Ericsson and Richard H. Haswell. Logan, UT: Utah State University Press, 2006. Available online from National Writing Project resource page.

Want to suggest a new tool, teaching activity, experiment or reading?

Please use the Comments section below or flick me an email.

[1] The grammar and writing-style options, part of Microsoft Office Proofing Tools, vary from one version of MS Office to another. At work, I use MS Office 2013; at home, Office 365. Acknowledging the potentially wide range of versions currently in use by all of us, and for the sake of simplicity, I will refer to Microsoft’s grammar and style checker as MSGC.

[2] Describing the effect of his newly acquired Malling-Hansen “writing ball”, Nietzsche typed in a letter to Peter Gast in February 1882, “Unser Schreibzeug arbeitet mit an unseren Gedanken,” or “Our writing tools are also working on our thoughts.” These lines have been quoted by Friedrich A. Kittler in his Gramophone, Film, Typewriter, translated and introduced by Geoffrey Winthrop-Young and Michael Wutz (Stanford, CA: Stanford University Press, 1999), 200; and by Darren Wershler-Henry in his wonderfully quirky book The Iron Whim: A Fragmented History of Typewriting (Ithaca, NY: Cornell University Press, 2007), 51.

[3] Vernon is referring to an article by Glynda Hull, Carolyn Ball, James L. Fox, Lori Levin & Deborah McCutchen, “Computer Detection of Errors in Natural Language Texts: Some Research on Pattern Matching,” in Computers and the Humanities 21.2 (1987): 103-118.